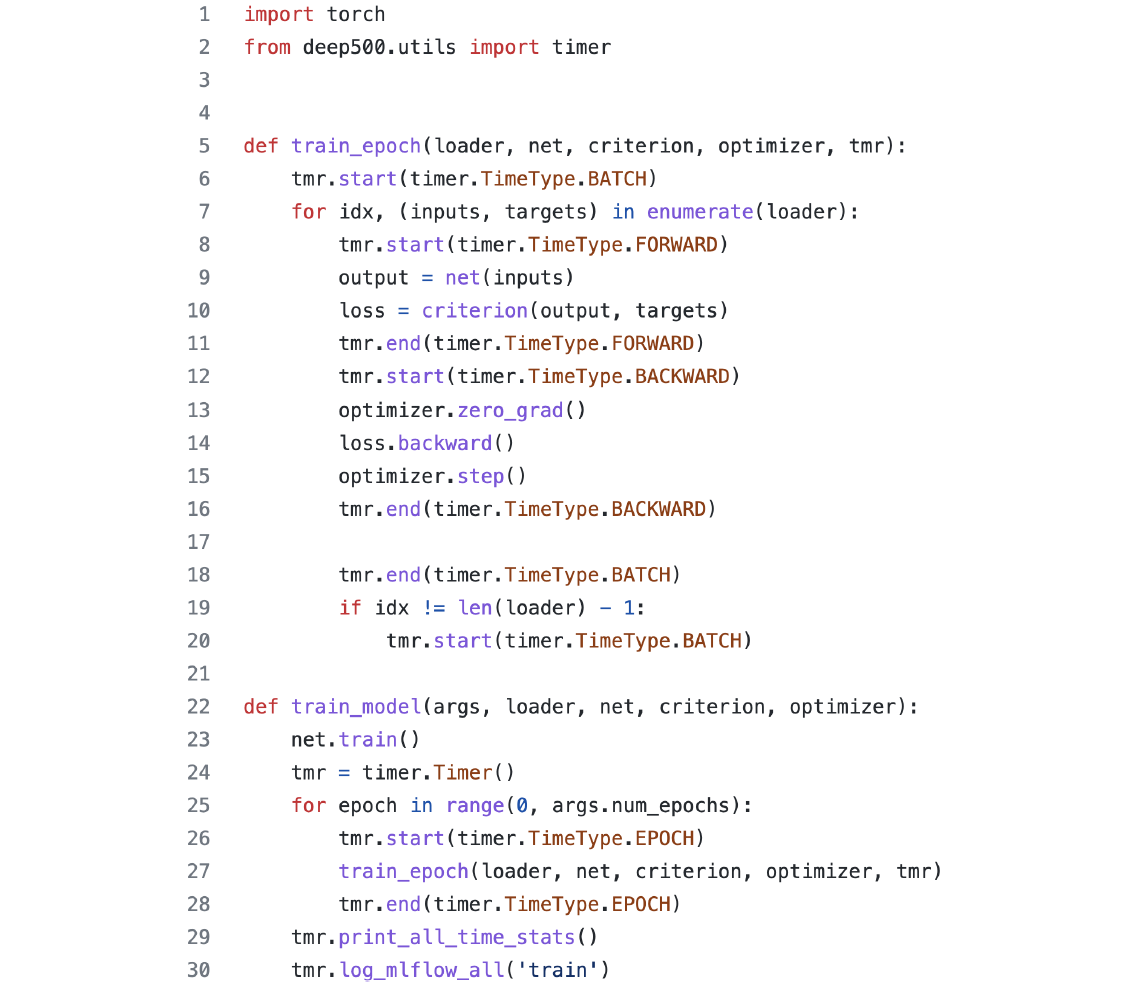

We extended Deep500 with a generic Timer interface, which supports marking the beginning (start()) and end (end()) of a region to be timed and measures the time elapsed between the two. This gives a developer the flexibility to deploy both fine- and coarse-grained timing. Each region is annotated with a key that describes the region being timed, to facilitate later analysis, and regions with different keys may be nested or otherwise overlap to break down timing further.
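To make the start()/end() pattern concrete, the following is a minimal sketch of a keyed region timer. It is not the Deep500 implementation: the class name `RegionTimer`, the `elapsed` attribute, and the keys `"batch"` and `"forward"` are hypothetical names chosen for illustration.

```python
import time
from collections import defaultdict


class RegionTimer:
    """Hypothetical keyed region timer; not the actual Deep500 Timer class."""

    def __init__(self, clock=time.perf_counter):
        self._clock = clock
        self._open = {}                    # key -> timestamp of the open region
        self.elapsed = defaultdict(list)   # key -> recorded durations in seconds

    def start(self, key):
        """Mark the beginning of the region identified by `key`."""
        if key in self._open:
            raise RuntimeError(f"region {key!r} is already being timed")
        self._open[key] = self._clock()

    def end(self, key):
        """Mark the end of the region and record its duration."""
        stop = self._clock()
        if key not in self._open:
            raise RuntimeError(f"region {key!r} was never started")
        duration = stop - self._open.pop(key)
        self.elapsed[key].append(duration)
        return duration


timer = RegionTimer()
timer.start("batch")                 # coarse-grained region
timer.start("forward")               # fine-grained region nested inside "batch"
sum(i * i for i in range(10_000))    # stand-in for real work
timer.end("forward")
timer.end("batch")
```

Because open regions are tracked per key, regions with different keys can nest or overlap freely, while starting the same key twice is reported as an error in this sketch.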

We define the following default keys that an application may use:

Many deep learning applications utilise GPUs to accelerate training. However, this poses a challenge for timing, as the GPU component of the application typically executes asynchronously with respect to the CPU host; indeed, for best performance, synchronisation points should be minimised. Therefore, timing a region on the CPU (e.g., forward propagation) may give misleading results, as only the time spent on the CPU to launch the asynchronous kernels is measured, rather than the complete time spent performing computations.

To address this, the Timer class is generic and supports pluggable timing implementations. The default implementation utilises standard high-resolution CPU performance timers. We also implemented a region timer that utilises CUDA events, a lightweight timing infrastructure provided as a standard part of the CUDA runtime for measuring elapsed time on GPUs. (A functionally identical API is available for the HIP runtime on AMD GPUs.) These events synchronise with the CPU only when explicitly requested, so the synchronisation cost can be amortised over many timed regions, keeping the overhead to the application small.
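The idea of a pluggable backend with deferred synchronisation can be sketched as follows, assuming PyTorch as the framework. The class names `CpuBackend`, `CudaEventBackend`, and `DeferredTimer` and the key `"forward"` are hypothetical and do not reflect the actual implementation; only `torch.cuda.Event` and its `record()`, `synchronize()`, and `elapsed_time()` methods are real PyTorch calls. The sketch falls back to the CPU backend when PyTorch or a GPU is unavailable.

```python
import time

try:
    import torch
except ImportError:          # PyTorch is optional for this sketch
    torch = None


class CpuBackend:
    """Marks are plain high-resolution CPU timestamps."""

    def mark(self):
        return time.perf_counter()

    def seconds_between(self, begin, end):
        return end - begin


class CudaEventBackend:
    """Marks are CUDA events recorded on the current stream."""

    def mark(self):
        event = torch.cuda.Event(enable_timing=True)
        event.record()       # enqueued asynchronously; does not block the CPU
        return event

    def seconds_between(self, begin, end):
        end.synchronize()    # block only until the end event has completed
        return begin.elapsed_time(end) / 1000.0   # elapsed_time() is in ms


class DeferredTimer:
    """Hypothetical timer that postpones synchronisation until resolve()."""

    def __init__(self, backend):
        self.backend = backend
        self._open = {}      # key -> begin mark
        self._pending = []   # (key, begin mark, end mark), not yet resolved

    def start(self, key):
        self._open[key] = self.backend.mark()

    def end(self, key):
        self._pending.append((key, self._open.pop(key), self.backend.mark()))

    def resolve(self):
        """Convert all pending marks into durations in seconds, per key."""
        results = {}
        for key, begin, end in self._pending:
            seconds = self.backend.seconds_between(begin, end)
            results.setdefault(key, []).append(seconds)
        self._pending.clear()
        return results


use_gpu = torch is not None and torch.cuda.is_available()
timer = DeferredTimer(CudaEventBackend() if use_gpu else CpuBackend())
for _ in range(3):
    timer.start("forward")
    sum(i * i for i in range(10_000))   # stand-in for launching GPU kernels
    timer.end("forward")
times = timer.resolve()                 # the only point that may synchronise
```

Calling `resolve()` once after many regions (for example, once per epoch) is what amortises the synchronisation cost in this sketch.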

In the current implementation, GPU-side timing may incur additional overhead in frameworks other than PyTorch. This is because those frameworks (e.g., TensorFlow) do not expose the underlying CUDA streams on which they launch GPU kernels, making timing difficult. We plan to address this in an updated version of the benchmarking tools. We also note that the generic timing implementation makes it simple to add support for timing on other accelerators which may be explored by MAELSTROM.

The current timing infrastructure is agnostic as to whether an application is being trained in a distributed manner. The user may choose either to time only a single process, or to time all processes independently and log the results separately (e.g., to detect load imbalance). For typical deep learning applications utilising distributed data-parallel training, the frequent global synchronisation means that timing on a single process is representative. The timing infrastructure can additionally be used to measure the time spent specifically in communication operations, so an application can determine whether it is communication-bound.

In an updated version of the benchmarking tools, we plan to include additional support for timing distributed training, particularly in the context of model-parallel training.

The existing Deep500 implementation already provides a basic CPU wallclock metric that can be integrated into a Deep500 recipe, and its events interface allows one to time specific regions. Our Timer interface above adds support for GPU timing and logging (discussed below). However, many applications in D1.3 are not currently implemented as Deep500 recipes; indeed, one uses scikit-learn, which is not supported by Deep500. To ease the transition to Deep500 and allow applications to get immediate performance feedback, the timing infrastructure is entirely framework-independent. A user can directly add timing to their existing implementation without any reimplementation or additional dependencies.

An important limitation we discovered during the benchmarking process is that many applications use “prebuilt” training loops (e.g., the model.fit() paradigm in TensorFlow/Keras). These approaches do not provide a way to obtain fine-grained benchmarking results (e.g., of forward, backward, or I/O time). Although these libraries typically provide a “callback” mechanism for their training loops, its granularity is limited to the batch level. This can be circumvented by rewriting the training loop, but doing so is a large burden, and we plan to investigate methods that will enable finer-grained timing despite these limitations.
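The batch-level granularity of the callback mechanism can be illustrated with a minimal sketch for Keras. The class name `BatchTimer` and its `batch_times` attribute are hypothetical; `on_train_batch_begin` and `on_train_batch_end` are the standard hooks of `keras.callbacks.Callback`. The import is guarded so the sketch can be read and exercised without TensorFlow installed.

```python
import time

try:
    from tensorflow import keras
    _Base = keras.callbacks.Callback
except ImportError:          # fall back so the sketch runs without TensorFlow
    _Base = object


class BatchTimer(_Base):
    """Hypothetical callback that records CPU wallclock time per batch."""

    def __init__(self):
        super().__init__()
        self.batch_times = []   # one duration in seconds per training batch
        self._begin = None

    def on_train_batch_begin(self, batch, logs=None):
        self._begin = time.perf_counter()

    def on_train_batch_end(self, batch, logs=None):
        # Forward, backward, and the optimiser update all fall inside this
        # single interval; the callback cannot separate them.
        self.batch_times.append(time.perf_counter() - self._begin)
```

With TensorFlow available, such a callback would be passed as `model.fit(..., callbacks=[BatchTimer()])`. Since it measures on the CPU, the earlier caveat about asynchronous GPU execution applies to the recorded times.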